This next Thursday is Whyday. Celebrate with some outrageous coding.

What are you doing for Whyday?

This next Thursday is designated as Whyday, a day set aside to commemorate all the many wacky contributions of Why the Lucky Stiff to the Ruby community. How do you celebrate WhyDay? The WhyDay web page suggests:

- See how far you can push some weird corner of Ruby (or some other language).

- Choose a tight constraint (for example, 4 kilobytes of source code) and see what you can do with it.

- Try that wild idea you’ve been sitting on because it’s too crazy.

- You can work to maintain some of the software Why left us (although Why is more about creating beautiful new things than polishing old things).

- On the other hand, Why is passionate about teaching programming to children. So improvements to Hackety Hack would be welcome.

- Or take direct action along those lines, and teach Ruby to a child.

As for me, I have several ideas:

- Play with the HTML5 canvas, maybe writing a Ruby DSL for easily generating diagrams.

- Play with some Grit (a Ruby/Git library) and see if I can categorize git commits into a swimlane structure.

- Combine the two ideas into a program that generates a graphical swimlane representation (using an HTML5 canvas) of a git project history (similar to the hand-drawn swimlanes in Vincent Driessen’s article).

Those are just my ideas. And I reserve the right to change my mind at a moment’s notice.

So, what are you doing for Whyday?

I’m switching from self-hosting Redmine to using Pivotal Tracker for issue tracking on my Open Source projects.

Switching to Pivotal Tracker

After running Redmine locally for a while, I’ve decided to switch to a hosted issue tracking service. I’ve moved all the open tickets on the onestepback.org Redmine app to my account on Pivotal Tracker.

Current Project Links

Here are the links to my current projects:

All the projects are marked public so you should be able to view the projects (and subscribe to an RSS feed) without actually signing up for anything.

Did I miss anything?

All the open tickets should be migrated to Pivotal Tracker. If you notice anything missing, let me know. Thanks.

I seem to have accidentally started a Twitter storm of debate on metaclasses in Ruby. Some things are just hard to say in 140 characters. So here’s my take on the issue.

Singleton Class / Eigenclass / Metaclass … What?

Ruby has this concept of per-object methods. In other words, you can define a method on an object, rather than on its class. Such per-object methods are callable on that object only, and not on any other object of the same class.

Implementation-wise, these per-object methods are defined in an almost invisible class-like object called the “Singleton Class”. The singleton class injects itself into the object’s method lookup chain immediately before the object’s class.
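To make that lookup order concrete, here is a minimal Ruby sketch (the `Animal` class and the `rex` and `fido` objects are made-up names for illustration). A method defined with `def rex.speak` lands in `rex`'s singleton class, and that singleton class's superclass is `Animal`, so it is searched just before the object's ordinary class.

```ruby
# A made-up class for illustration.
class Animal
  def kind
    "animal"
  end
end

rex  = Animal.new
fido = Animal.new

# A per-object (singleton) method: only rex gets it.
def rex.speak
  "Woof"
end

p rex.speak                   # "Woof"
p fido.respond_to?(:speak)    # false

# The method lives in rex's singleton class ...
p rex.singleton_class.instance_methods(false)  # [:speak]

# ... which sits just before Animal in rex's method lookup.
p rex.singleton_class.superclass               # Animal
p rex.kind                                     # "animal" (found in Animal)
```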

Some people object to the name “singleton class”, complaining that it is easily confused with the singleton pattern from the Gang of Four book. Other suggested names are “Eigenclass” and “Metaclass”.

I objected to the use of the name “metaclass” for the singleton class on the grounds that metaclass has a well understood meaning in non-Ruby circles, and that the singleton class is not a metaclass.

So, What’s a Metaclass?

According to Wikipedia:

In object-oriented programming, a metaclass is a class whose instances are classes. Just as an ordinary class defines the behavior of certain objects, a metaclass defines the behavior of certain classes and their instances.

I get two things out of this: (1) instances of metaclasses are classes, and (2) the metaclass defines the behavior of a class.

So Singleton classes aren’t Metaclasses?

No, not according to the definition of metaclass.

In general, instances of singleton classes are regular objects, not classes. Singleton classes define the behavior of regular objects, not class objects.

But Everybody Calls them Metaclasses!

I can’t change what everybody calls them. But calling a dog a horse doesn’t make it a horse. I’m just pointing out that the common usage of the term metaclass is contrary to the definition of metaclass used outside the Ruby community.

Does Ruby have Metaclasses?

Yes … I mean no … well maybe.

Is there something that creates classes in Ruby? Yes, the class Class is used to create new classes. (e.g. Class.new). All classes are instances of Class.

Is there something that defines the behavior of classes? Yes, any class can have its own behavior by defining singleton methods. The singleton methods go into the singleton class of the class.

Ruby doesn’t have a single “thing” that is a full metaclass. The role of a metaclass is split between Class (to create new classes) and singleton classes of classes (to define class specific behavior).
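A small Ruby sketch of that split (the `Greeter` class and its `build` method are hypothetical names): `Class.new` plays the "creates classes" role, while a singleton method on `Greeter` lives in `Greeter`'s singleton class and supplies class-specific behavior. For contrast, the last object has a singleton class too, but that object is not a class.

```ruby
# Role 1: Class creates classes; every class is an instance of Class.
Greeter = Class.new do
  def greet
    "hello"
  end
end

p Greeter.class                 # Class
p String.instance_of?(Class)    # true

# Role 2: behavior specific to one class is a singleton method of that
# class, stored in the class's singleton class.
def Greeter.build
  new
end

p Greeter.build.greet                              # "hello"
p Greeter.singleton_class.instance_methods(false)  # [:build]

# Contrast: an ordinary object's singleton class describes that object,
# and that object is not a class.
obj = Object.new
def obj.hi
  "hi"
end

p obj.singleton_class.instance_methods(false)      # [:hi]
p obj.is_a?(Class)                                 # false
```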

So, Singleton Classes Are Metaclasses?

You weren’t listening. Not all singleton classes are metaclasses. Only singleton classes of classes are metaclasses. And then only weak, partial metaclasses.

Does it Matter What We Call Them?

In the long run, probably not. Most people seem happy to (incorrectly) call them metaclasses, and this post is unlikely to change that behavior. Shoot, it seems the Rails team has already immortalized the term.

However, if reading this post has helped you understand what real metaclasses are, then it was worthwhile.

“Have I mentioned today how much git rocks?” — One of my office mates

I hear that spontaneous outpouring of appreciation for git about once a day. Usually it is someone in the office who just finished a task that would have been difficult with any of the source control systems we had used previously. Git has really impacted our day to day development process, and that’s the sign of a powerful tool.

It wasn’t always like this. I remember when EdgeCase made a rather abrupt switchover from subversion to git. I had only been dabbling with git at the time, but the guys in charge of our code repositories said “Here, this is good stuff. We’re switching … Now.”

Let me tell you, it was a little rough for a few days. Eventually we figured it out. Although we love the tool now, the learning curve was a bit steep to climb.

About two months ago we were talking in the office about git and how to encourage people to adopt it. We talked about the need for a gentle introduction to git that gets the user over the learning curve quickly. That gave me the idea for the “Source Control Made Easy” talk, which teaches the concepts behind git by starting from scratch and developing its ideas one by one.

A Pragmatic Screen Cast

Mike Clark of the Pragmatic Studio contacted me about turning the talk into a screencast that could reach a wider audience than the normal conference-going crowd. I’m happy to say that Source Control Made Easy is now available from the Prags.

If you are thinking about adopting git, or have already started using git but are still at the awkward stage, then this screencast is designed for you. The Pragmatic Studio page has a link to a preview of the screencast. I hope you check it out.

Git doesn’t come with a merge tool, but will gladly use third party tools…

The Git Debate

The recurring debate on switching from svn to git is going on again on the Ruby Core mailing list. Among the many objections to git is that it doesn’t come with a nice merge tool. Perforce was held up as an example of a tool that does merging right. Although I’m not a big fan of Perforce in general, its merge tool was one of its two redeeming aspects.

Now You Can Have Your Cake and Eat it Too!

Although it is correct that git doesn’t come with a nice merge tool, it is quite happy to use any merge tool that you have on hand. And since Perforce’s merge tool is available for free (see the updates at the end of this post for where to get it), you can use p4merge with git.

Just add the following to your .gitconfig file in your home directory:

```ini
[merge]
    summary = true
    tool = "p4merge"
[mergetool "p4merge"]
    cmd = /PATH/TO/p4merge \
        "$PWD/$BASE" \
        "$PWD/$LOCAL" \
        "$PWD/$REMOTE" \
        "$PWD/$MERGED"
    keepBackup = false
    trustExitCode = false
```

Now, whenever git complains that a conflict must be resolved, just type:

```sh
git mergetool
```

When you are done resolving the merge conflicts, save the result from p4merge and then quit the utility. If git has additional files that need conflict resolution, it will restart p4merge with the next file.

Enjoy.

Interested in (not) Hearing about Git?

I’m doing a talk that’s not about git at the Ohio, Indiana, Northern Kentucky PHP Users Group (yes indeed, that acronym is OINK-PUG) on September 17th. Although the talk is explicitly not about git, you will come away from it with a much deeper understanding of what goes on behind the curtain when using git.

If there are other local groups interested in not hearing about git, feel free to contact me.

Update (6/Sep/09)

Several people have mentioned that it is not obvious where to get the p4merge tool from the perforce page. Go to the Perforce downloads page and click on the proper platform choice under “The Perforce Visual Client” section. When you download “P4V: The Visual Client”, you will get both the P4V GUI application and the p4merge application.

Oops, Another Update (7/Sep/09)

I forgot that the shell script that runs p4merge is something you have to create yourself. Here’s mine:

```sh
#!/bin/sh
/Applications/p4merge.app/Contents/Resources/launchp4merge "$@"
```

There are more details on Andy McIntosh’s site.

Try Jay’s demo at home.

Jay Phillips’ Adhearsion Demo at the Mountain West Ruby Conf

Jay Phillips’ talk at MWRC attempted to get the audience involved in actually running an Adhearsion demo on their own laptops. Unfortunately, the demo at MWRC was plagued with firewall and network problems, but eventually I was able to get it working. Here are the steps needed.

Go ahead, try this at home. It’s a lot of fun.

Step 1—Sign up for an Adhearsion account.

You can do that here: http://adhearsion.com/signup

You will need a Skype account to complete the sign-up. After signing up, you should get an email with a link that you need to click before your account is activated. Go ahead and activate the account now.

Step 2—Install the Adhearsion Gem

Run:

```sh
gem install adhearsion
```

I’m running the 0.8.2 version of the gem.

Step 3—Create an Adhearsion project

Run:

```sh
ahn create project_name
```

Step 4—Enable the Sandbox

Run:

```sh
cd project_name
ahn enable component sandbox
```

Step 5—Edit Your Credentials

Edit the file: components/sandbox/sandbox.yml and update the username and password you used when you created the Adhearsion account in step 1.

Step 6—Create a Dial Plan for the Sandbox

Edit the dialplan.rb file to contain the following:

```ruby
adhearsion {
  simon_game
}

sandbox {
  play "hello-world"
}
```

The adhearsion section should already be in the file. You will be adding the sandbox section.

Step 7—Start the Adhearsion Server

Run:

```sh
cd ..
ahn start project_name
```

You should see:

```
INFO ahn: Adhearsion initialized!
```

Errors at this stage might mean that your Adhearsion account isn’t set up properly, you don’t have the right username and password (in step 5), or that you have firewall issues preventing you from connecting to the Adhearsion server.

Step 8—Call The Sandbox

Using Skype, call the Skype user named sandbox.adhearsion.com. You should hear a hello world message.

Step 9—Change the Dial Plan

Just for fun, change the dialplan.rb file to contain:

```ruby
adhearsion {
  simon_game
}

sandbox {
  play "hello-world"
  play "tt-monkeys"
}
```

(Add the tt-monkeys line to the sandbox dial plan).

Now call the sandbox again (skyping user sandbox.adhearsion.com) to hear the change in the dial plan. Monkeys FTW.

More Examples

Here’s an example of what can be done in a dial plan. I was just goofing around with my dial plan.

```ruby
adhearsion {
  simon_game
}

sandbox {
  play "vm-enter-num-to-call"
  digits = input 1, :timeout => 10.seconds
  case digits
  when '1'
    play "hello-world"
  when '2'
    play "tt-monkeys"
  when '3'
    play "what-are-you-wearing"
  when '4'
    play 'conf-unmuted'
  when '5'
    play 'tt-weasels'
  when '6'
    play "pbx-invalidpark"
  when '7'
    play "1000", "dollars"
  when '8'
    play "followme/sorry"
  when '9'
    simon_game
  when '0'
    play Time.now
  else
    play "demo-nomatch"
  end
  sleep 1
  play "demo-thanks"
}
```

See http://adhearsion.com/examples for more dialplan examples.

That’s It

Think about what you are doing. You are calling the Adhearsion server and controlling how that remote server responds with the Adhearsion program running on your own local box. That is wild.

The adhearsion sandbox makes it easy to play around with telephony programming without any investment in the associated hardware.

I hope this demo encourages you to give Adhearsion a try.

All RailsConf 2009 speakers are invited to a special event.

Who?

Anyone speaking at RailsConf 2009

When?

Sunday, May 3, 4:00PM – 6:00PM

(The day before RailsConf 2009 officially begins)

Where?

Las Vegas Hilton in Pavilion 1

What?

Presentations for Presenters.

Why?

You’ve come all the way to Las Vegas to tell the world about your latest Ruby/Rails project or idea. You want to make sure that you really get your message across. So, how do you do that?

The Presentations for Presenters session will give you practical tips for improving your RailsConf presentation. We will cover all aspects of planning, preparing, creating and delivering your talk, so that your unique message will get across to your audience.

Plus we will have a lot of fun. Hope to see you there.

What do I need to do?

Start planning now to attend. Since this session is actually the day before RailsConf officially begins, make sure that your travel plans get you there in time.

If you are speaking at RubyConf this year, we have a special opportunity for you.

Are You Speaking at RubyConf 2008?

If so, congratulations! And have we got a deal for you …

Wednesday evening, Nov 5, at 6:00 pm, (that’s the night before the conference) we are inviting all speakers to a special training session. I’m going to be sharing some ideas for putting together and delivering a good presentation.

After my talk, Patrick Ewing and Adam Keys are geared up to do some Powerpoint Karaoke with everyone there. I’m not even sure what Powerpoint Karaoke is, but it sounds like fun.

I hope to see everyone there.

Update (4/Nov/08)

I’ve talked to Adam today. He says that Patrick isn’t going to be able to make RubyConf this year, but we will be ready to roll with Powerpoint Karaoke anyway.

Update (5/Nov/08)

It looks like the speakers training will be in the Olympic Room tonight. The Olympic Room is on the same floor as the registration desk. Go to the left past the elevators and turn right down that hall (or ask someone who looks like they know what they are doing).

I’ve received a lot of requests for my old articles …

The Article Section has been Restored

When I changed to my new hosting machine, I moved all my blog posts but didn’t move any of the articles. Of course I intended to move them eventually but never got around to it.

A lot of people have been asking for this article or that presentation, or pointing out that a number of old bookmarked links are no longer any good. So due to popular demand the Articles and Presentations section of onestepback.org is now restored.

Enjoy

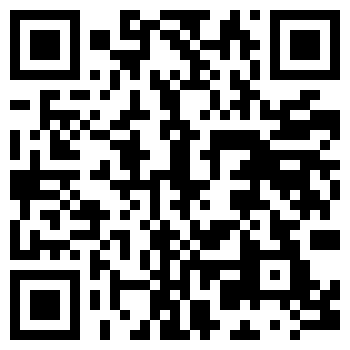

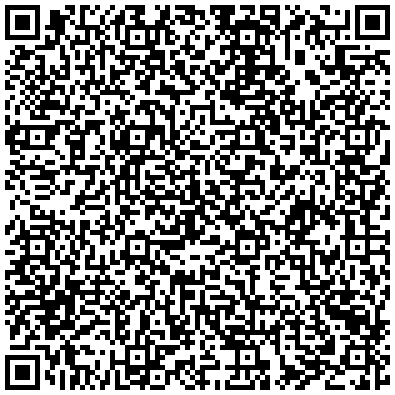

Aaron Patterson put a QR code into his RubyConf Brazil Presentation.

Aaron Patterson Shows the Way

At RubyConf Brazil last week, Aaron Patterson (@tenderlove) posted his contact information as a QR code as a slide in his presentation. How cool is that? I managed to snap a picture of it while it was on the screen and later downloaded a QR scan program for my iPhone to decode it.

QR codes are 2D bar codes that can easily be printed. You can encode URLs, phone numbers, SMS messages, or even just plain text in a QR code. Check out Wikipedia for more information.

To get started, grab a QR scanner for your phone. The AT&T scanner works pretty well and is available on a wide selection of phones.

QR Code Examples

To get you started, here’s a link to my twitter feed. If you are using the AT&T scanner, just start the program and aim the camera at the graphic below. After a moment, the scanner will automatically detect the QR code and offer to send you to the URL.

Next up, my contact information:

And finally, a little snippet of Ruby code. Does anybody recognize it?

Generating QR Codes

I used this generator site to generate the QR codes seen on this page.

Give it a try and have some fun!

(QR Code is a registered trademark of DENSO WAVE INCORPORATED)

After you copy an Ubuntu (or any Debian-based) image, you'll probably lose your network connectivity. After a little bit of digging, it turns out that udev persists the MAC address of the network device in /etc/udev/rules.d/*net.rules, so the copy, whose network card has a different MAC address, brings that card up under a new interface name (for example eth1 instead of eth0). The fix:

```sh
sudo rm /etc/udev/rules.d/*net.rules
sudo shutdown -r now  # reboot
```

I am in a lot of meetings. I am also on IM most, if not all, of the time. Despite all the hoopla around Google Hangouts, Google Calendar doesn't support Hangouts/Google Talk reminders.

The Chrome desktop notifications aren't quite what I need either: they don't give me a link straight into the hangout I am supposed to be in. Instead, when I click the link in them, it opens Google Calendar in the default week view, and I have to scan for the current time, find the meeting, click it and then click on the "Join hangout" link therein.

A couple of weeks ago I got fed up with this and decided to hack something together to make life easier. I added hangout link support to gcalcli (see my fork), wrote a script that parses the TSV output finding the links I care about in events coming up in the next 10 minutes, and then wired it up with sendxmpp and cron to send me an IM with the meeting title, start time and either a hangout link or a deep link to the event in google calendar.

(While I was at it, I also put together a mini life hack: a script that shows me all the events I have coming up in the next 4 hours.)

If you too want to get your calendar reminders via IM:

- Install my fork of gcalcli (master branch)

- Authorize gcalcli to your account by launching it -- it'll walk you through the oauth2 stuff including launching your browser

- Install sendxmpp

- Make your local install of sendxmpp work with the SSL requirements from google by editing and forcing RC4-MD5.

- Download/tweak to suit your needs: gcalcli_parse_tsv, cal_im_reminder and install a cron job
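The post doesn't reproduce gcalcli_parse_tsv, so here is a hypothetical Ruby sketch of just the filtering step: keep events starting in the next 10 minutes and prefer the hangout link over the calendar link. The column layout is an assumption (check what your gcalcli build actually emits in TSV mode), the `Event` struct and method names are made up, and the sendxmpp/cron wiring is left out.

```ruby
require "time"

# Assumed TSV layout (hypothetical, not gcalcli's documented format):
# start_date, start_time, end_date, end_time, hangout_link, event_link, title
Event = Struct.new(:start, :title, :link)

# Return the events that start within the next `window` seconds.
def upcoming_events(tsv, now: Time.now, window: 10 * 60)
  tsv.each_line.filter_map do |line|
    cols = line.chomp.split("\t")
    next if cols.size < 7                     # skip malformed rows
    start = Time.parse("#{cols[0]} #{cols[1]}")
    next unless start >= now && start <= now + window
    # Prefer the hangout link; fall back to the calendar event link.
    link = cols[4].empty? ? cols[5] : cols[4]
    Event.new(start, cols[6], link)
  end
end

# One short line per event, suitable for an IM message.
def reminder_text(event)
  "#{event.title} at #{event.start.strftime('%H:%M')}: #{event.link}"
end

# Made-up sample rows for illustration.
now = Time.parse("2013-05-01 10:00")
sample = "2013-05-01\t10:05\t2013-05-01\t10:30\thttps://example.com/hangout\thttps://example.com/event1\tStandup\n" \
         "2013-05-01\t11:00\t2013-05-01\t12:00\t\thttps://example.com/event2\tPlanning\n"

events = upcoming_events(sample, now: now)
events.each { |e| puts reminder_text(e) }
# Standup at 10:05: https://example.com/hangout
```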

TL;DR:

Stick with SQL databases for now, and use whatever's easiest in whatever environment you're using. That's probably SQLite on your laptop, PostgreSQL if you're deploying to Heroku, and PostgreSQL or MySQL if you're deploying somewhere besides Heroku. And as soon as you deploy anything that has data that you care about, and will have multiple people using it, make sure to not be on SQLite.

More explanation below:

How much research should I be doing on my own?

Very little. If you're a complete newcomer to programming, you're going to have your hands full as it is. Your choice of database is not the first problem you have to solve.

SQL or NoSQL?

You may have heard of these new-fangled databases loosely called "NoSQL". They're cool, but they're a lot newer than SQL databases, which will cause you trouble when you're getting started. This isn't so much an issue of the technical merits of one style vs. another, but if you choose a NoSQL store, you're going to have an uphill climb with setup, installation, library support, documentation, etc.

SQL, on the other hand, is super-popular and might actually be older than you. That means that for every single SQL question you have, you're only a Google search away from an answer. Stick with SQL for now.

Which SQL database should I use?

There are three meaningful open-source SQL databases: SQLite, MySQL, and PostgreSQL. An over-simplified comparison might go like this:

- SQLite, as the name implies, is meant to be a very light implementation of SQL functionality. You can use it, say, if you're making a Mac application and need a good way to store structured data, but you don't want to go to the trouble of setting up a SQL server. This also makes it great for developing on your own machine, but since its multi-connection functionality is limited, you're not going to be able to run an app in production with it.

- MySQL is a full-scale SQL server, and many large websites use it in production.

- PostgreSQL is also a full-scale SQL server, used by other large websites in production. This is actually my personal favorite, but that distinction doesn't matter when you're just getting started.

Any of these will get you what you need: A place to store your Rails data and a way to start learning SQL bit-by-bit. You should optimize for ease of installation or deployment. So that means:

- SQLite when you're working on your laptop

- PostgreSQL if you're deploying to Heroku, since Heroku favors PostgreSQL over MySQL

- PostgreSQL or MySQL if you're deploying somewhere else. Check their docs and use whatever they tell you to use.

And it's going to be fine, at first, to have the same app using different SQL engines in different environments. At some point you may end up using some really specific, optimized SQL that SQLite can't run, but that's not a newbie problem.

So I switched to Vim, and now I love it.

For years, I was actually using jEdit, of all things, even in the face of continued mockery by other programmers. My reasoning was well-practiced: TextMate didn't support split-pane, all the multi-key control sequences in Emacs had helped give me RSI, and Vim was just too hard to learn. jEdit isn't very good at anything, but it's okay at lots of things, and for years it was fine.

But eventually, I took on a consulting gig where I was forced to learn Vim. And, as so many have promised, once I got over the immensely difficult beginning of the learning curve, I was hooked.

Beneath the text, a structure

I'm now one of those smug Vim partisans, and one of the cryptic things we like to say is that Insert mode is the weakest mode in Vim. So what the hell does that mean? It means that if you accept the (lunatic, counterintuitive) idea that you can't just insert the character * by typing *, what you get is that every character on the keyboard becomes a way to manipulate text without having to prefix it with Control, Cmd, Option, etc. (In fact, * searches for the next instance of the word that is currently under the cursor.)

When you edit source code, or any form of highly structured text, this matters, because over the course of your programming career you're far more likely to spend time navigating and editing existing text than inserting brand new text. So the promise of Vim is that if you optimize navigating and editing over inserting, your work will go faster. After months of practice, it does feel like I edit text far more quickly--that hitting Cmd/Ctrl/etc less often compensates for the up-front investment of learning these highly optimized keystroke sequences.

But it's definitely a strange mindset. In most text editors, you think of the document as a casual smear of characters on a screen, to be manipulated in a number of ways that are all okay but never extremely focused. But Vim assumes you're editing highly structured text for computers, and in some ways it pays more attention to the structure than the characters. So after a while it feels like you're operating an ancient, intricate machine that manipulates that structure, and that the text you see on the screen is just a side-effect of those manipulations.

Investments

Is it worth the time? To answer that question you have to get almost existential about your career: How many more decades of coding do you have in front of you? If you're planning on an IPO next year and then you're going to devote the rest of your life to your true passion of playing EVE Online, then maybe keep using your current text editor. But for most of us, a few weeks or more of hindered productivity might be worth the eventual gains.

As is often the case, it's not about raw, straight-line speed--it's about fewer chances to get distracted from the task at hand. Nobody ever codes at breakneck speed for 60 minutes straight. But when you're in the middle of a really thorny problem, maybe you'd be better off with a tool that's that much faster at finding/replacing/capitalizing/incrementing/etc, which might give you fewer chances to get distracted from the problem you're actually trying to solve.

As somebody who's used Vim for a little less than a year, that's what it feels like to me. Most of the time. I have to admit that once in a while the Vim part of my brain shuts down and I stare at the monitor for a few seconds. Those moments are happening less and less, though.

Beginner steps

When it came to learning Vim, here's what worked for me:

Practical Vim

Drew Neil's Practical Vim is, uh, the best book about a text editor I've ever read. It does a great job of explaining the concepts embedded inside of Vim. I skim through this every few months to try to remember even more tips, and can imagine myself doing that for years.

MacVim

As Yehuda recommends, I started in MacVim and not just raw Vim. At first you should let yourself use whatever crutches you need--mouse, arrow keys, Cmd-S--to help you feel like you can still do your work. I agree with Yehuda that tool dogmatism is going to be counterproductive if it makes the beginning of the learning process so painful that you give up.

And I still use the arrow keys, and don't really buy the argument that it's ever important to unlearn those.

Janus

As with Emacs, a lot of the power of Vim is in knowing which plugins & customizations to choose. Yehuda and Carl's Janus project is a pretty great place to start for this. I'd install it, and skim the README so you can at least know what sorts of features you're adding, even though you won't use them all for some time.

vim:essentials

Practical Vim is great for reading when you're on the subway or whatever, but you'll need something more boiled-down for day-to-day use. For a while I had this super-short vim:essentials page open all the time.

Extra credit: One intermediate step

After I got minimally familiar with Normal mode, I started hating the way that I would enter Insert mode, switch to my browser to look up something, and switch back to Vim forgetting which mode I was in. I entered the letter i into my code a lot.

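I suspect many Vim users just get used to holding that state in their head, or never leaving the Vim window without hitting Esc first, but I decided to simply install a timeout which pulls out of Insert mode after 4 seconds of inactivity (the CursorHoldI event fires after 'updatetime' milliseconds without a keypress in Insert mode, and 'updatetime' defaults to 4000):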

```vim
au CursorHoldI * stopinsert
```

As explained in Practical Vim, the best way to think of Insert mode is as a precise, transactional operation: Enter Insert mode, edit text, exit Insert mode. The timeout helped me get into that mindset quickly, and live in Normal mode, which is the place to see most of the gains from Vim.

This is an intermediate step, and you shouldn't try it right away. If you're a beginner you're probably not going to benefit from being in Normal mode all the time--if anything the frustration would be likely to make you give up on it. But once Vim starts feeling less disorienting, and you're ready to really learn what Practical Vim has been telling you, I'd give this a try.

Hope that's helpful. And I hope that after a month or two of this, you become as smug and self-assured about your text editor as I am today.

Over time, technological progress makes it easier to write automated tests for familiar forms of technology.

Meanwhile, economic progress forces you to spend more time working with unfamiliar forms of technology.

Thus, the amount of hassle that automated testing causes you is constant.

My Goruco 2012 talk, "The Front-End Future", is now up. In it, I talk about thick-client development from a fairly macro point-of-view: Significant long-term benefits and drawbacks, the gains in fully embracing REST, etc.

I also talk a fair amount about the cultural issues facing Ruby and Rails programmers who may be spending more and more of their time in Javascript-land going forward. Programmers are people too: They have their own anxieties, desires, and values. Any look at this shift in web engineering has to treat programmers as people, not just as resources.

Francis Hwang - The Front-End Future from Gotham Ruby Conference on Vimeo.

As always, comments are welcome.

What do roof laying and machine learning have in common?

At Triposo we have a database of restaurants that we've collected from various open content sources. When we display this list in our mobile travel guides one of the key pieces of information for our users is what cuisine the restaurant has. We often have this available but not all the time.

Sometimes you can guess the cuisine just by looking at the name:

- "Sea Palace" - probably Chinese.

- "Bavarian Biercafe" - probably German.

- "Athena" - probably Greek.

A useful technique you will run into very quickly as soon as you start working with machine learning is what is popularly known as shingles. (Referring to roof shingles not the disease.)

Shingles, in machine learning, are the set of overlapping character n-grams produced from a string of characters. It's common to add start and end indicators to the string so that characters at the start and end are treated specially. Hopefully this diagram explains how to produce the shingles from a string.

Shingles are easily generated with a one-liner Python list comprehension (n is the size of each n-gram, n=4 often works well):

```python
[word[i:i + n] for i in range(len(word) - n + 1)]
```

Shingles are great when you want a measure of how similar two strings of characters are: the more n-grams they share, the more similar the strings are. Since you can look n-grams up with hashing instead of slightly more expensive trees, this is an important tool when you're working with really large quantities of data. At Google, O(1) rules.

Let's use shingles to guess the cuisine of a restaurant given its name.
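A minimal Ruby sketch of shingling plus one common way to turn shared n-grams into a score, Jaccard similarity (shared shingles divided by all distinct shingles). The `{` start marker matches the `'{ath'` key in the model shown later in this post; the `}` end marker, the `shingles` and `similarity` names, and the choice of Jaccard are assumptions. Ruby's `Set` is hash-based, so membership tests are constant time on average.

```ruby
require "set"

# Overlapping character n-grams ("shingles") of a string. '{' and '}' are
# assumed start/end markers so the first and last characters get their own
# shingles.
def shingles(text, n = 4)
  word = "{#{text.downcase}}"
  (0..word.length - n).map { |i| word[i, n] }
end

# Jaccard similarity: shared shingles divided by all distinct shingles.
def similarity(a, b, n = 4)
  sa = shingles(a, n).to_set
  sb = shingles(b, n).to_set
  union = sa | sb
  return 0.0 if union.empty?
  (sa & sb).size.fdiv(union.size)
end

p shingles("athena")                        # ["{ath", "athe", "then", "hena", "ena}"]
p similarity("athena", "athens").round(2)   # 0.43
p similarity("athena", "sea palace")        # 0.0
```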

First of all let's index all the restaurant names where we know the cuisine. We want to know how likely it is that a particular 4-gram has a particular cuisine.

We start with a hash map from 4-gram to observed cuisine probability (in Python collections.Counter is just great for this). We iterate through all the restaurants and for every 4-gram shingle we bump up that cuisine in the corresponding probability distribution.

Right now we have an association from 4-gram to number of observations, but we want to work with probabilities; the raw number of observations is irrelevant. So let's also normalize each histogram. This prevents common cuisines like American and Chinese from scoring really highly for common n-grams. (This was a problem I initially had.)

So our model looks something like this:

```
'bier' => {'german': 0.37, 'currywurst': 0.06, ...}
'{ath' => {'greek': 0.68, 'american': 0.04, 'pizza': 0.04, ...}
...
```

Then to guess the cuisine of a restaurant name we produce the 4-gram shingles and then add together the probability distribution for the cuisines for each 4-gram.

```
lookup('bavarian biercafe')
[('german', 2.28), ('italian', 1.17), ('cafe coffee shop', 0.95)]

lookup('athena')
[('greek', 2.64), ('pizza', 0.37), ('italian', 0.36)]
```

The highest-scoring cuisine is our best guess, and the bigger its lead over the second score, the higher our confidence.

The neat thing about this technique is not only that it grows linearly with the size of the learning set and the size of the inputs; it's also really easy to parallelize. This is why it's so popular when you're working at "web scale".

So, should we use this in our guides? I'm not entirely convinced: for high-confidence guesses we are usually correct, but a human is also very good at guessing the cuisine. That said, it provided a nice little example of the type of processing we do in our pipeline.
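The whole pipeline described above (count cuisines per 4-gram, normalize each histogram, sum the distributions at lookup time, compare the top two scores) can be sketched in a few lines of Ruby. This is an illustration, not Triposo's code: the five training restaurants are invented, `Hash.new(0)` stands in for Python's `collections.Counter`, and `margin` is one simple, assumed way to express the "lead over the second score" confidence idea.

```ruby
# Invented training data: [restaurant name, known cuisine].
TRAINING = [
  ["Bavarian Biercafe", "german"],
  ["Bier Stube",        "german"],
  ["Athena Taverna",    "greek"],
  ["Athens Grill",      "greek"],
  ["Sea Palace",        "chinese"]
]

# 4-gram shingles with assumed '{' / '}' start and end markers.
def shingles(text, n = 4)
  word = "{#{text.downcase}}"
  (0..word.length - n).map { |i| word[i, n] }
end

# 1. Count cuisine observations per 4-gram.
counts = Hash.new { |h, k| h[k] = Hash.new(0) }
TRAINING.each do |name, cuisine|
  shingles(name).each { |g| counts[g][cuisine] += 1 }
end

# 2. Normalize each 4-gram's histogram into a probability distribution.
MODEL = counts.transform_values do |hist|
  total = hist.values.sum.to_f
  hist.transform_values { |c| c / total }
end

# 3. Score a name by summing the distributions of its shingles.
def lookup(name, top = 3)
  scores = Hash.new(0.0)
  shingles(name).each do |g|
    MODEL.fetch(g, {}).each { |cuisine, prob| scores[cuisine] += prob }
  end
  scores.sort_by { |_, s| -s }.first(top)
end

# 4. Confidence: how far the best score is ahead of the runner-up.
def margin(ranked)
  return 0.0 if ranked.empty?
  ranked[0][1] - (ranked[1] ? ranked[1][1] : 0.0)
end

p lookup("Athena")       # [["greek", 4.0]]
p lookup("Biergarten")   # [["german", 2.0]]
```

With real data each 4-gram maps to many cuisines, so the ranked list has several entries and the margin becomes informative.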

I don't think there's a new tech bubble (yet).

I'll probably regret arguing against The Economist, but I have to say I agree with Marc Andreessen here. There isn't a bubble as such; it's a simple matter of Economics 101: price is defined by supply and demand. There's a big demand for investing in the new "social media" technology companies but there's almost no supply. High demand + low supply = high price.

But don't get me wrong: LinkedIn trading at several thousand times earnings is still overvalued. Facebook is trading at several hundred times earnings, but chances are still high that it will "pop" at the IPO and probably increase in value several times. Does that mean I would invest my own money in these companies? Hell no, I'm coming in way too late. All the capital that wants to invest in this strong new technology trend needs to go somewhere, and so far LinkedIn is the only stock it can go into. At some point more companies will go public and it will get easier to invest; supply will start to meet demand and the valuations of these companies will start going down.

What about all these startups with crazy valuations? Same thing. There is no big company there to mop up all the surplus capital, which means that early investment rounds are getting oversubscribed. Startups can ask for higher valuations and still get the capital they need.

I still don't think this is a bubble. A few overvalued companies are not enough to create a bubble in the same sense as the one in the late '90s (I was there). Those sorts of bubbles have a very significant impact on the world economy. I doubt this will happen now.

In fact, I am investing myself. I left a huge salary and a lot of unvested shares on the table when I left Google to start Triposo. I have no salary and no idea whether it's going to work out.

Why invest? I think we are at an inflection point in this industry where a lot of interesting things will happen. It's not only about technological developments: what we're seeing now is mainstream adoption of things that have been around for quite some time. At Triposo we're primarily betting on powerful mobile devices and high-quality open content. As technologies these things aren't exactly new, but they have significantly disrupted the industry by going mainstream (via the iPhone and Wikipedia).

NOTE: I don't keep Ruby Switcher updated anymore. Use RVM instead.

Chad mentioned that he'd gotten ruby 1.9.1 and 1.8.6 side by side on his workstation (his code for this is in his awesome spicy-config repo).

I took some time this morning to get a similar setup working. Now, at my prompt, I type use_ruby_186 or use_ruby_191 to go back and forth between the Ruby 1.8.6 shipped with Leopard and a self-compiled install of ruby 1.9.1.

Steps for you to get there:

- Compile and install ruby 1.9.1:

```shell
mkdir -p ~/tmp
cd ~/tmp
curl -O ftp://ftp.ruby-lang.org/pub/ruby/1.9/ruby-1.9.1-p0.tar.gz
tar xzf ruby-1.9.1-p0.tar.gz
cd ruby-1.9.1-p0
./configure --prefix=$HOME/.ruby_versions/ruby_191
make
make install
```

- Install the ruby switcher:

```shell
curl -L http://github.com/relevance/etc/tree/master%2Fbash%2Fruby_switcher.sh?raw=true?raw=true > ~/ruby_switcher.sh
echo "source ~/ruby_switcher.sh" >> ~/.bash_profile
```

Note that I use ~/.gem for my gems. If you don't do that, you'll have to modify the switcher to specify your GEM_HOME. Type gem env and look for GEM PATHS to figure out what you should set it to.

Today is my last day at Google.


I joined Google about 3 years ago, and for most of that time I worked as one of the tech leads of Google Wave. It was fun, exciting, and slightly crazy. We went from being the most hyped product on the web to being slightly ridiculed. Then we were cancelled...

After Wave I worked for a little while on the Maps API until my 20% project Shared Spaces took off and became my 100% project. It was fun for a little while but we've now transitioned it to another team.

Douwe and I had been playing around with an idea for a startup for a little while, and we felt it was probably as good a time as any to have a go at it. Together with Douwe's two brothers, we're starting Triposo. We're building travel guides for mobile phones. We crawl open content on the web; merge, score, and generally massage it in a pipeline (currently running on our rather beefy workstations under our desks at home); then publish it to iPad, iPhone, and Android (Windows Phone 7 to come). Here, for example, is our Barcelona guide in the App Store and in the Market. They're not bad, actually, and we've barely started!

I'm also doing another startup. My son Samir was born last Friday (he's a week old today!), so here's the mandatory new-parent gratuitous baby shot:

Those of you who've found value in my last couple of bash-specific posts may also like the latest addition to my ~/.bash_profile.

This one iterates through one or more directories and creates aliases to their subdirectories so I don't have to. Here's the scenario: I've got a directory ~/work/ where I keep work projects, a ~/writings/ where I keep all the writing projects, and so on. I used to have aliases to each subdirectory, e.g. alias project1="cd /Users/muness/work/project1". With shame, I admit that I maintained each of these manually. No more!

Install instructions:

curl -L http://github.com/relevance/etc/tree/master%2Fbash%2Fproject_aliases.sh?raw=true?raw=true > ~/.project_aliases.sh

echo "source ~/.project_aliases.sh" >> ~/.bash_profile

Usage instructions:

- Move your work projects to ~/work.

- Above the source ~/.project_aliases.sh line in your ~/.bash_profile, add PROJECT_PARENT_DIRS[0]="$HOME/work".
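The idea is simple enough to sketch in a few lines. The following Ruby version only illustrates it and is not the contents of project_aliases.sh: project_aliases is a hypothetical method that returns alias lines (skipping hidden directories and parent directories that don't exist) rather than defining the aliases in the current shell.

```ruby
# Illustrative Ruby take on the idea (not the actual bash script):
# emit one `alias name="cd path"` line per subdirectory of each parent dir.
def project_aliases(parent_dirs)
  parent_dirs.select { |parent| File.directory?(parent) } # skip missing parents
             .flat_map do |parent|
    Dir.children(parent).sort
       .reject { |name| name.start_with?(".") }            # skip hidden dirs
       .map { |name| File.join(parent, name) }
       .select { |path| File.directory?(path) }            # ignore plain files
       .map { |path| %(alias #{File.basename(path)}="cd #{path}") }
  end
end

# Example, mirroring PROJECT_PARENT_DIRS[0]="$HOME/work":
# puts project_aliases([File.join(Dir.home, "work")])
```

You could, for instance, write that output to a file and source it from ~/.bash_profile. Directory names containing spaces or shell metacharacters would produce invalid alias names; the sketch doesn't handle that.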

You may be interested in my blog post over at PragMactic OS-Xer where I describe my motivation for these recent shell scripts.

Uncle Bob delivered a compelling keynote at RailsConf last week that put forth the argument that what we need most in programming is more professionalism. I loved the delivery, but I disagree with the conclusion.

I originally never wanted to be a programmer precisely because I thought the only type of programmer that existed was the kind Bob talked about: the engineers with the proud professional practices that never wavered under pressure.

While I deeply respected that stature, it just never felt like a place where I belonged. I didn't identify with the engineering man or the seriousness of the efforts he pursued. Before I discovered Ruby, I felt in large part that I was just faking my way along in this world. Here only briefly, for rent.

But that all changed when I found Ruby and a community consisting as much of artists as engineers. People waxing lyrical about beautiful code and its sensibilities. People willing to trade hard scientific measurements such as memory footprint and runtime speed for something as ephemeral as programmer happiness.

That's where I found an identity that I could finally relate to directly. That's when I finally got really passionate about what I do for a living and my own participation started to blossom.

Now the wonderful thing about this new age of programming is that we need and prosper from both types of programmers. I believe Ruby is such a fantastic community and platform exactly because both types are coming together and sharing with each other. The bazaar is so much richer when the cultural backgrounds of the participants are diverse.

So while I love the idea of Bob's green wristband that reminds him always to do test-first development and his own personal professional oath, that level of adherence has never worked for me. I never had the discipline it takes to fulfill such a lofty goal of professionalism.

Now, Bob may think that there's no place for people like me in programming (I sure know plenty of people who do!), but I obviously think that would be a mistake. Not just because I've grown rather fond of what I do, but because I've seen so many other unprofessionals just like me come in and add those delicious twists that can really change things.

We aren't perfect. We often swear, act unresponsively, and can certainly be characterized as unprofessional a lot of the time. But I think that together with professionals like Bob, we stand a good chance of leaving the world of programming in a better condition than we found it.

Now that the internet hysteria is dying down, I'd love to explore some of the more concrete things that we could do to actually get more women involved. As I've stated earlier, I doubt simply refraining from having saucy pictures of pole dancers is going to do the trick. If that was all it'd take, it'd be easy beans.

There's going to be a session called Women in Rails at RailsConf next Tuesday, which is bound to focus a lot on this, but there'll undoubtedly be a lot of good ideas outside of that group as well that we shouldn't wait to get going on. Here are a few ideas to get started with:

Share your discovery story

For the women already in Rails, it'd be great to hear what in particular attracted you to the platform. That could highlight areas of the ecosystem that deserve even more support. Perhaps there was an especially well-written introduction that just made everything click. Perhaps a screencast or an interview or something else.

Highlight local communities with women

There are a bunch of local Rails user groups all over the place. If women could get an idea of which groups already had other women present there, it'd probably be a less daunting thought to attend. Knowing that there's at least going to be one other woman in attendance could help a bunch.

Can we pair up with other communities?

Programming communities may indeed often be awfully short on women, but programmers interact with plenty of other professions that are not. I wonder if there are ways where we could get women from, for example, the design community to intermingle on projects like Rails Rumble day. Sorta how the police academy and the nurses in training always throw joint parties in Denmark.

I'd love to hear more and would be more than happy to help promote and push it. Despite the spasm over that one talk and the underlying differences of opinion exposed by it, there's no reason we can't use this as a jumping-off point to do something about the actual, core issue.

So either email, tweet, or blog your suggestions and stories and I'll use this space to help point them out. Let's treat the low number of women in the community as a bug, cut out most of the needless bluster, and work on some actual patches. Onward and upward!

I just can't get into the argument that women are being kept out of programming because the male programmer is such a testosterone-powered alpha specimen of our species. Compared to most other male groups that I've experienced, the average programmer ranks only just above mathematicians in being meek, tame, and introverted.

When I talk to musicians, doctors, lawyers, or just about any other profession that has a fair mix of men and women, I don't find that these men are any less R-rated than programmers. Quite the contrary, in fact.

When I sit down with any of these groups, I usually find that I'm the one blushing. Yet that atmosphere somehow doesn't keep women from joining any of these fields. It's from that empirical observation that I draw the conclusion that this argument is just bullshit.

Now that doesn't mean the underlying problem isn't worth dealing with. It absolutely is! I think that the world of programming could be much more interesting if more women were part of it. I wish I knew how to make that happen. If I find out, I'll be the first to champion it.

But in the meantime I don't think we're doing anyone a service by activating the WON'T SOMEBODY THINK OF THE CHILDREN police and squashing all other sorts of edges and diversity in the scene.

You certainly have to be mindful when you're working near the edge of social conventions, but that doesn't for a second lead me to the conclusion that we should step away from all the edges. Finding exactly where the line goes — and then enjoying the performance from being right on it — requires a few steps over it here and there.

I've found that the fewer masks I try to wear, the better. This means less division between the personality that's talking to my close personal friends, the one socializing with my colleagues, and the one interacting with my hobby or business worlds.

Blending like this isn't free. You're bound to upset, offend, or annoy people when you're not adding heavy layers of social sugarcoating. I choose to accept that trade because my personal upside from congruence is that I find more energy, more satisfaction, and more creativity when the bullshit is stripped away.

This means that it leaks out that I love listening to Howard Stern, that Pulp Fiction is one of my favorite movies, that I laugh out loud at Louis CK's Bag of Dicks joke, that I fully accept my instinctual attraction to the female body, that I think drugs should be legal, that I really like the word fuck and other gems of profanity, and on and on.

Now I get that lots of people find much of that crude and primitive. I'm okay with that. I do take offense when yet another lame stereotype is thrown out (like that porn by definition is misogynistic), but I've learned to deal with that.

What I'm not going to do, though, is apologize for any of these preferences and opinions. I'm happy to be an R rated individual and I'm accepting the consequences of the leakage that comes from that. If you can deal with that, I'm sure we're going to get along just fine.

On one of the projects we're working on, we needed to occasionally generate SQL from our migrations. Déjà vu, I thought. A few minutes of Googling later, I remembered why: Jay and I had been on a project a couple of years ago where we had the same need. Jay's code didn't quite work anymore due to some ActiveRecord changes, and a search for an alternative implementation turned up nothing.

I took his code and modified it to our needs. A short time later, I had the code pulled out into a Rails plugin, migration_sql_generator. Install it (script/plugin install git://github.com/muness/migration_sql_generator.git) and then run the rake task:

rake db:generate:migration_sql

Running this task generates two SQL files per migration in db/migration_sql, named in the form 20090216224354_add_users.sql and 20090216224354_add_users_down.sql.

I've used the plugin with success using the sqlserver adapter, less luck with the mysql adapter (change_column and rename_column blow up because the mysql adapter checks for the presence of a column first) and no luck with the sqlite adapter. Haven't tried it with the postgres adapter.

Last week I figured out a way to make my life a little bit easier by abstracting the SCM I was using and having the prompt indicate whether I was in a Subversion or Git repository. Thanks to Mike Hommey, whose script I tweaked for my needs.

For a long time, I've wanted my iTerm tab title to be more useful. I started by having it display the last process I executed in that tab (this also shows the full path in iTerm's window title bar):

PS1='\[\e]2;\h::${PWD/$HOME/~}\a\e]1;$(history 1 | sed -e "s/^[ ]*[0-9]*[ ]*//g")\a\]\$ '

Next up I wanted to show the currently running command. Google showed me how:

trap 'echo -e "\e]1;$BASH_COMMAND\007\c"' DEBUG

Instead of trying to explain this code I'll quote the Bash man page:

BASH_COMMAND

The command currently being executed or about to be executed, unless the shell is executing a command as the result of a trap, in which case it is the command executing at the time of the trap.

Some more tweaks followed:

- Distinguish between a previously executed command and a currently executing one by decorating them (I chose to surround the former with square brackets and the latter with >/<, e.g. [ls] and >vi<).

- Show the context of the executing command in the tab. This is just the repository where I executed the command.

- Make this coexist with TextMate.

And a screenshot to summarize:

The code is in github/relevance/etc/tree/master/bash/bash_vcs.sh.

Install instructions:

curl -L http://github.com/relevance/etc/tree/master%2Fbash%2Fbash_vcs.sh?raw=true > ~/.bash_dont_think.sh

echo "source ~/.bash_dont_think.sh" >> ~/.bash_profile

Enjoy! Tested with iTerm on Leopard.

If you use MySQL, odds are you need Maatkit whether you know it or not. It's the Swiss army knife: I mostly use it to parallelize backup and restore of huge databases on multi-core machines, but it's also handy for fake splitting large files, executing sql on multiple tables and a whole lot more. (You also want mytop if you're wondering what's up with MySQL connections.)

Here's the install script if you too want Maatkit at your fingertips (and you do. trust me.):

```shell
#!/bin/sh
# tested on Leopard with MySQL installed using the package installer
sudo perl -MCPAN -e 'install Bundle::DBI'
sudo perl -MCPAN -e 'install DBD::mysql'
# you may need to force the install as follows:
# sudo perl -MCPAN -e 'force install DBD::mysql'
mkdir -p ~/tmp
cd ~/tmp
curl -O http://maatkit.googlecode.com/files/maatkit-2725.tar.gz
tar xzvf maatkit-2725.tar.gz
cd maatkit-2725
perl Makefile.PL
sudo make install
```

The flow of Merb ideas into Rails 3 is already under way. Let me walk you through one of the first examples, whose design I've been working on. Merb has a feature related to Rails' respond_to structure that works for the generic cases where you have a single object or collection that you want to respond with in different formats. Here's an example:

```ruby
class Users < Application
  provides :xml, :json

  def index
    @users = User.all
    display @users
  end
end
```

This controller can respond to HTML, XML, and JSON requests. When running display, it'll first check whether there's a template available for the requested type, which is often the case with HTML, and otherwise fall back on trying to convert the display object. So an XML request would get the result of @users.to_xml.

The applications I've worked on never actually had this case, though. I always had to do more than just convert the object to the type or render a template. Either I needed to do a redirect for one of the types instead of a render, or I needed to do something else besides the render. So I never got to spend much time with the default case that's staring you in the face from scaffolds:

```ruby
class PostsController < ApplicationController
  def index
    @posts = Post.find(:all)

    respond_to do |format|
      format.html
      format.xml { render :xml => @posts }
    end
  end

  def show
    @post = Post.find(params[:id])

    respond_to do |format|
      format.html
      format.xml { render :xml => @post }
    end
  end
end
```

Cut duplication when possible, give full control when not

But the duplication case is definitely real for some classes of applications. And it's pretty ugly. The respond_to blocks are repeated for index, show, and often even edit. That's a fairly heavyweight structure repeated three times. This is where the provides/display setup comes in handy and zaps that duplication.

For Rails 3, we wanted the best of both worlds: the full respond_to structure when you need to do things that don't map to the generic structure, but still the generic approach at hand when circumstances allow for its use.

Dealing with symmetry and progressive expansion in API design

There were a couple of drawbacks with the provides/display duo, though, that we could deal with at the same time. The first was the lack of symmetry in the method names. The words "provides" and "display" don't reflect their close relationship, and if you throw in the fact that they're actually both related to rendering, it gets even muddier.

The symmetry relates to another point in API design that I've been interested in lately: progressive expansion. There should be a smooth path from the simple case to the complex case. It should be like an ogre: it should have layers. Here's what we arrived at:

```ruby
class PostsController < ApplicationController
  respond_to :html, :xml, :json

  def index
    @posts = Post.find(:all)
    respond_with(@posts)
  end

  def show
    @post = Post.find(params[:id])
    respond_with(@post)
  end
end
```

This is the vanilla provides/display example, but it has symmetry between respond_to as a class method, respond_with as an instance method, and the original respond_to blocks. So it also feels progressive when you unpack the respond_with and transform it into a full respond_to block if you suddenly need per-format differences.

The design also extends the style to work just at an instance level without the class-level defaults:

```ruby
class DealsController < SubjectsController
  def index
    @deals = Deal.all
    respond_with(@deals, :to => [ :html, :xml, :json, :atom ])
  end

  def new
    respond_with(Deal.new, :to => [ :html, :xml ])
  end
end
```

It's quite common for the index action to have different format responsibilities than new or show or whatever. This design covers all of those scenarios.
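The class-level/instance-level symmetry is easier to see in a stripped-down model. The following is a toy, framework-free Ruby sketch, not Rails' implementation: ToyController, the templates hash standing in for template lookup, the 406 status, and passing the requested format as an argument are all assumptions made for illustration. It shows class-level defaults, the per-action :to override, and the fallback from a template to converting the object.

```ruby
# Toy model of respond_to/respond_with (NOT the Rails implementation).
class ToyController
  # Class level: declare the formats every action can respond with.
  def self.respond_to(*formats)
    @formats = formats
  end

  def self.formats
    @formats || []
  end

  # Hypothetical stand-in for template lookup: format => rendered template.
  def initialize(templates = {})
    @templates = templates
  end

  # Instance level: :to overrides the class-level defaults for one action.
  def respond_with(resource, format, to: self.class.formats)
    return [406, "Not Acceptable"] unless to.include?(format)

    if @templates.key?(format)
      [200, @templates[format]]                    # a template wins
    else
      [200, resource.public_send("to_#{format}")]  # else convert the object
    end
  end
end

# Hypothetical resource that knows how to convert itself to XML.
Post = Struct.new(:title) do
  def to_xml
    "<post><title>#{title}</title></post>"
  end
end

class PostsController < ToyController
  respond_to :html, :xml
end

controller = PostsController.new({ html: "<h1>Hello</h1>" })
post = Post.new("Hello")
p controller.respond_with(post, :html)            # uses the HTML template
p controller.respond_with(post, :xml)             # falls back to post.to_xml
p controller.respond_with(post, :json)            # :json not declared => 406
p controller.respond_with(post, :xml, to: [:html]) # per-action override => 406
```

In Rails the format comes from the request and rendering goes through the normal template machinery; the toy only mirrors the shape of the API.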

Yehuda has also been interested in improving the performance of respond_to/respond_with by cutting down on the blocks needed, especially when you're just using respond_with, which doesn't need to declare any blocks at all.

All in all, I think this is a great example of the kind of superior functionality that can come out of merging ideas from both camps. We're certainly excited to pull the same trick on many other framework elements. I've been exploring what a revised router that imports the best ideas from both could look and feel like. I'll do a write-up when there's something real to share.

It seems that we thoroughly caught the interwebs by surprise by announcing that Merb is being merged into Rails 3. 96% of the feedback seems to be very positive: people are incredibly excited about us closing the rift and emerging as a stronger community.

But I wanted to take a few minutes to address some concerns of the last 4%. The people who feel like this might not be such a good idea. And in particular, the people who feel like it might not be such a good idea because of various things that I've said or done over the years.

There's absolutely no pleasing everyone. You can't and shouldn't try to make everyone love you. The best you can do is make sure that they're hating you for the right reasons. So let's go through some of the reasons that at least in my mind are no longer valid.

DHH != Rails

I've been working on Rails for more than five years. Obviously I've poured much of my soul, talent, and dedication into this. And for the first formative years, I saw it as my utmost duty to ensure the integrity of that vision by ruling with a comparably hard hand. Nobody had keys to the repository but me for the first year or so.

But I don't need to do that anymore, and haven't for a long time. The culture of what makes for good Rails has spread far and wide and touched lots of programmers. These programmers share a similar weltanschauung, but they don't need to care only about the things that I care about. In fact, the system works much better if they care about different things than I do.

My core philosophy about open source is that we should all be working on the things that we personally use and care about. Working for other people is just too hard and the quality of the work will reflect that. But if we all work on the things we care about and then share those solutions between us, the world gets richer much faster.

Defaults with choice

So let's take a concrete example. Rails ships with a bunch of defaults. For the ORM, you get Active Record. For JavaScript, you get Prototype. For templating, you get ERb/Builder. And so on and so forth. Rails 3 will use the same defaults.

I personally use all of those default choices, so do many other Rails programmers. The vanilla ride is a great one and it'll remain a great one. But that doesn't mean it has to be the only one. There are lots of reasons why someone might want to use Data Mapper or Sequel instead of Active Record. I won't think of them any less because they do. In fact, I believe Rails should have open arms to such alternatives.

This is all about working on what you use and sharing the rest. Just because you use jQuery and not Prototype, doesn't mean that we can't work together to improve the Rails Ajax helpers. By allowing Rails to bend to alternative drop-ins where appropriate, we can embrace a much larger community for the good of all.

In other words, just because you like reggae and I like Swedish pop music doesn't mean we can't bake a cake together. Even suits and punk rockers can have a good time together if they decide to.

Sharing the same sensibilities

I think what really brought this change around was the realization that we largely share the same sensibilities about code. That we're all fundamentally Rubyists with very similar views about the big picture. That the rift in many ways was a false one. Founded on lack of communication and a mistaken notion that because we care about working on different things, we must somehow be in opposition.

But talking to Yehuda, Matt, Ezra, Carl, Daniel, and Michael, I learned, as did we all, that there are no real blockbuster oppositions. They had been working on things that they cared about, which to a large extent were entirely complementary to what Jeremy, Michael, Rick, Pratik, Josh, and I had been working on in the Rails core.

Once we realized that, it seemed rather silly to continue the phantom drama. While there's undoubtedly a deep-founded need for humans to see and pursue conflict, there are much bigger and more worthwhile targets to chase together rather than amongst ourselves. Yes, I'm looking at you J2EE, .NET, and PHP :D.

So kumbaya, motherfuckers, and Merry Christmas!

We have 24" and 30" pairing stations, an intern, freshly roasted coffee delivered weekly, a fridge stocked with caffeine, more caffeine, beer, and Dave's Insanity sauce. We have cool t-shirts and all manner of radiators up on the walls, whiteboards and the Beta Brite. Music is served by our CI/Music server.

The bookshelves are stocked with reference materials, the occasional bottle of scotch, and recommended readings. On the list of recommended readings we've got The Carpet Makers, The Company, The Omnivore's Dilemma, The Eyre Affair, Behind Closed Doors, Bloodsucking Fiends and The Deadline, Men In Hats, Volume I, Don't Make me Think, The Insane are Running the Asylum.

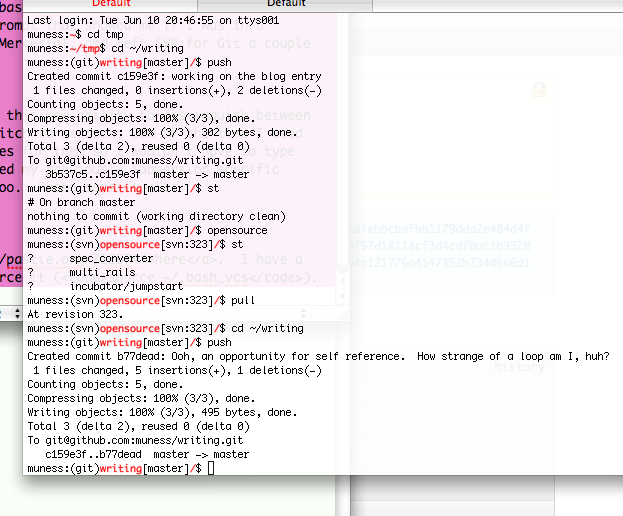

Our favorite radiator is the Friday radiator. Fridays are special: we don't do billable work. Instead we open source, blog (self-reference makes this blog entry extra cool), watch educational movies, and play Wii on the projector. Here's today's:

Oh, let's not forget the buckets of mice.

This blog entry was inspired by another excellent business card cartoon from Hugh Macleod.

Update: I've since updated and moved this script. See my new blog post.

I had a chance to pair with Rob today. On his console window, I noticed something I wanted: his bash prompt indicated that he was in a Git repository, along with the branch he was on. Later, after he'd left, I found myself wondering how it worked. I simply had to have it, and I wasn't willing to wait a whole day to figure out how he'd done it. Patience is not a virtue. (In that spirit, click here for the code if you're too impatient to read the rest of this blog entry, which I spent countless hours writing. Uphill both ways. In the snow.)

A few minutes of Googling turned up a blog entry with relevant bash fu. The code there was for changing the prompt so that it indicated whether you were using svn, git, svk, or Mercurial, along with some repository metadata. A couple of minutes later (it took a while to copy and paste the code since it was interwoven with comments), I found out it didn't work on Leopard. readlink turned out to be the culprit. Instead of figuring out why it wasn't working, I replaced it with something simpler (specifically I used: base_dir=`cd $base_dir; pwd`). Voila, things worked: I now had a hella cool prompt that showed me if I was in a Subversion or a Git repository. I've never used Mercurial, and I left SVK for Git a couple of months ago. Yay.

Being who I am I had to do something more with this new ability to distinguish between Subversion and Git. And I knew exactly which itch to scratch: 90% of the time I use the same scm commands. I even had shell aliases for them so I wouldn't have to type them. A few minutes later I'd converted my previously Subversion specific aliases to generic ones that worked with Git too. Screenshot says it all:

If you want the same, download the script as ~/.bash_vcs and add source ~/.bash_vcs at the end of your ~/.bash_profile.

There are lots of great JavaScript libraries out there. Prototype is one of the best, and it ships with Rails as the default choice for adding Ajax to your application.

Does that mean you have to use Prototype if you prefer something else? Absolutely not! Does it mean that it's hard to use something other than Prototype? No way!

It's incredibly easy to use another JavaScript library with Rails. Let's say that you wanted to use jQuery. All you would have to do is add the jQuery libraries to public/javascripts and include something like this in the head section of your layout to include the core and UI parts:

```erb
<%= javascript_include_tag "jquery", "jquery-ui" %>
```

Then say you have a form like the following that you want to Ajax:

```erb
<% form_for(Comment.new) do |form| %>
  <%= form.text_area :body %>
  <%= form.submit %>
<% end %>
```

By virtue of the conventions, this form will have an id of new_comment, which you can decorate with an event in, say, application.js with jQuery like this:

```javascript
$(document).ready(function() {
  $("#new_comment").submit(function() {
    $.post($(this).attr('action') + '.js',
           $(this).serializeArray(), null, 'script');
    return false;
  });
});
```

This will make the form submit to /comments.js via Ajax, which you can then catch in the CommentsController with a simple format alongside the HTML response:

```ruby
def create
  @comment = Comment.create(params[:comment])

  respond_to do |format|
    format.html { redirect_to(@comment) }
    format.js
  end
end
```

The empty format.js simply tells the controller that there's a template ready to be rendered when a JavaScript request is incoming. This template would live in comments/create.js.erb and could look something like:

```erb
$('#comments').append(
  '<%= escape_javascript(render(:partial => @comment)) %>');
$('#new_comment textarea').val("");
$('#<%= dom_id(@comment) %>').effect("highlight");
```

This will append the newly created @comment model to a dom element with the id of comments by rendering the comments/comment partial. Then it clears the form and finally highlights the new comment as identified by dom id "comment_X".

That's pretty much it. You're now using Rails to create an Ajax application with jQuery and you even get to tell all the cool kids that your application is unobtrusive. That'll impress them for sure :).

Rails loves all Ajax, not just the Prototype kind

This is all to say that the base infrastructure of Rails is just as happy to return JavaScript written for any package other than Prototype. It's all just a mime type anyway.

Now if you don't want to put on the unobtrusive bandana and instead would like a little more help to define your JavaScript inline, like with remote_form_for and friends, you can have a look at something like jRails, which mimics the Prototype helpers for jQuery. There's apparently a similar project underway for MooTools too.

So by all means use the JavaScript library that suits your style, but please stop crying that Rails happens to include a default choice. That's what Rails is. A collection of default choices. You accept the ones where you don't care about the answer or simply just agree, you swap out the ones where you differ.

Update: Ryan Bates has created a screencast that shows you how to do the steps I outlined above and more.

See the Rails Myths index for more myths about Rails.

Ruby on Rails has been around for more than five years. It's only natural that the public perception of what Rails is today is going to include bits and pieces from its own long history of how things used to be.

Many things are not how they used to be. And plenty of things are, but got spun in a way to seem like they're not by people who had either an axe to grind, a competing offering to push, or no interest in finding out.

So I thought it would be about time to set the record straight on a number of unfounded fears, uncertainties, and doubts. I'll be going through these myths one at a time and showing you exactly why they're just not true.

The point is not really to convince you that you should be using Rails. Only you can make that choice. The point is to give you the facts so you can make your own informed decision, one that isn't founded on the many myths floating around.

It used to be somewhat inconvenient to deal with UTF-8 in Rails because Ruby's primary method of dealing with UTF-8 strings was through regular expressions. If you just did a naïve string operation, you'd often be surprised by the results and think that Ruby was somehow fundamentally unable to deal with UTF-8.

Take the string "Iñtërnâtiônàlizætiøn". If you were to do a string[0,2] operation and expected to get the first two characters back, you'd get "I\303", because Ruby operated on the byte level, not the character level. UTF-8 characters can be multibyte, so you'd only get 1.5 characters back. Yikes!
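
A minimal sketch of the difference, assuming modern Ruby (2.0 or later). Since Ruby 1.9, String#[] is character-aware for UTF-8 strings, so the old byte-level behavior is reproduced here with String#byteslice:

```ruby
s = "Iñtërnâtiônàlizætiøn"

# Byte-level slice: roughly what Ruby 1.8's string[0,2] returned
bytes = s.byteslice(0, 2)
puts bytes.inspect          # => "I\xC3" (only the first byte of "ñ")
puts bytes.valid_encoding?  # => false

# Character-level slice: Ruby 1.9+ String#[] on a UTF-8 string
chars = s[0, 2]
puts chars                  # => Iñ

puts s.length               # => 20 characters
puts s.bytesize             # => 27 bytes (7 of the characters take two bytes)
```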

Rails dealt with this long ago by introducing a character proxy on top of strings that is UTF-8 safe. Now you can just do s.first(2) and you'll get the first two characters back. No surprises. Everything inside of Rails uses this, so validations, truncation, and what have you are all UTF-8 safe.
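
To see why character-level operations matter for something like truncation, here is a sketch in modern Ruby. The truncate_chars helper is hypothetical and is not the Rails API; it just contrasts cutting by characters with a naïve cut by bytes:

```ruby
# Hypothetical helper, not part of Rails: truncate by characters, never by bytes.
def truncate_chars(text, length, omission = "...")
  return text if text.length <= length
  keep = [length - omission.length, 0].max
  text[0, keep] + omission
end

s = "Iñtërnâtiônàlizætiøn"

# A naive 5-byte cut lands in the middle of "ë" and leaves an invalid string
naive = s.byteslice(0, 5)
puts naive.valid_encoding?   # => false

# Cutting by characters always leaves whole characters behind
safe = truncate_chars(s, 8)
puts safe                    # => Iñtër...
puts safe.valid_encoding?    # => true
```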

Not only that, but we actually assume that all operations are going to happen with UTF-8. The default charset for responses sent with Rails is UTF-8. The default charset for database operations is UTF-8. So Rails assumes that everything coming in, everything going out, and all that's being manipulated is UTF-8.

This is a long way of saying that Rails is perfectly capable of dealing with all kinds of international texts that can be described in UTF-8. The early inconveniences of Ruby's regular expression-based approach have long been superseded. You need no longer worry that Rails doesn't speak your language. Basecamp, for example, has content in some 70+ languages at least. It works very well.

But what about translations and locales?

It was long a point of contention that Rails didn't ship with an internationalization framework in the box. There has, however, long been a wide variety of plugins that added this support. There was localize, globalize, and many others, each with their own strengths and tailored to different situations.

All these plugins have powered Rails applications in languages other than English for a long time. Some made it possible to translate strings to multiple languages, others just made Rails work well for one other given language. But whatever your translation need was, there was probably a plugin out there that did it.

But obviously things could be better, and with Rails 2.2 we've made them a whole lot more so. Rails 2.2 ships with a simple internationalization framework that makes it silly easy to do translations and locales. There's a dedicated discussion group, wiki, and website for getting familiar with this work. I've been using it in a test translating Basecamp into Danish and really like what I'm seeing.

So in summary, Rails is very capable of making sites that need to be translated into many different locales. Before Rails 2.2, you'd have to use one of the many plugins. After Rails 2.2, you can use what's in the box for most cases (or add additional plugins for more exotic support).

Don't forget about time zones!

Dealing well with content in UTF-8 and translating your application into many languages goes a long way to make your application ready for the world, but most sites also need to deal with time. When you deal with time in a global setting, you also need to deal with time zones.

I'm incredibly proud of the outstanding work that Geoff Buesing led for the implementation of time zones in Rails 2.1. It's amazing how Geoff and team were able to reduce something so complex to something so simple. And it shows the great power of being a full-stack framework. Geoff was able to make changes to Active Support, Action Pack, and Active Record to make the entire experience seamless.
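
The basic model behind this kind of time zone support is to store every timestamp in UTC and convert to the viewer's zone only for display. Plain Ruby has no zone database, so this sketch uses fixed UTC offsets with Time#getlocal as a stand-in for named zones (which means it ignores daylight saving changes); in Rails you'd use Time.zone instead. The users hash is hypothetical:

```ruby
# Store a single canonical moment in UTC
created_at = Time.utc(2008, 6, 1, 12, 0, 0)

# Hypothetical per-user preferences, as fixed UTC offsets valid in June
users = {
  "copenhagen" => "+02:00", # CEST
  "chicago"    => "-05:00", # CDT
  "tokyo"      => "+09:00"
}

# Convert only at display time; the stored value never changes
users.each do |name, offset|
  local = created_at.getlocal(offset)
  puts "#{name}: #{local.strftime('%Y-%m-%d %H:%M %z')}"
end
# copenhagen: 2008-06-01 14:00 +0200
# chicago: 2008-06-01 07:00 -0500
# tokyo: 2008-06-01 21:00 +0900
```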

To learn more about time zones in Rails, see Geoff's tutorial or watch the Railscast on Time Zones.

I've talked to lots of PHP and Java programmers who love the idea and concept of Rails, but are afraid of stepping in because of Ruby. The argument goes that since they already know PHP or Java, it would be less work to just pick one of the Rails knockoffs in those languages. I really don't think so.

Ruby is actually an amazingly simple language to pick up the basics of. Yes, there's a lot of depth in the metaprogramming corners, but you really don't need to go there to get stuff done. Certainly not to get going. Getting productive with the basic mechanics takes much less time than you likely think.

After all, Ruby is neither LISP nor Smalltalk. It's not a completely new and alien world if you're coming from PHP or Java. Lots of concepts and constructs are the same. The code even looks similar in many cases, just stated more succinctly.
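
As a rough illustration, here is a small, hypothetical Invoice class. A PHP or Java programmer should recognize the class, the constructor, the instance variables, and the methods; the main new idea is the block passed to inject, which replaces an explicit loop with a running total:

```ruby
# Hypothetical example class; nothing here is Rails-specific.
class Invoice
  attr_reader :lines

  # The constructor, like __construct in PHP or a constructor in Java
  def initialize(customer)
    @customer = customer   # instance variable, like $this->customer or this.customer
    @lines = []
  end

  # Returns self so calls can be chained
  def add(description, amount)
    @lines << { description: description, amount: amount }
    self
  end

  # Sum the amounts with a block instead of a for loop and an accumulator
  def total
    @lines.inject(0) { |sum, line| sum + line[:amount] }
  end

  def to_s
    "Invoice for #{@customer}: #{lines.size} lines, total #{total}"
  end
end

invoice = Invoice.new("ACME").add("Hosting", 30).add("Domain", 12)
puts invoice   # => Invoice for ACME: 2 lines, total 42
```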

Learn Ruby in the time it would take to learn a framework

I'd argue that most programmers could get up and running in Ruby in about the same time it would take them to learn another framework in their current language anyway. I know it sounds a lot more scary to learn a whole new language rather than just another framework, but it really isn't.

The number one piece of feedback I get from people who dreaded the jump but did it anyway is: Why didn't I do this sooner?

Learn while doing something real that matters to you

Also, speaking from my own experience learning Ruby, I'd actually recommend trying to do something real. Don't just start with the basics of the language in a vacuum. Pick something you actually want done and just start doing it one step at a time. You'll learn as you go along and you'll have the motivation to keep it up because stuff is coming alive.

So don't write off Rails because you don't know Ruby. Your fears of starting from scratch again will quickly make way for the joy of the new language and you'll get to use the real Rails as a reward. Come on in, the water is fine!

(If you don't want to bother with the history lesson, just skip straight to the answer)

Rails has traveled many different roads to deployment over the past five years. I launched Basecamp on mod_ruby back when I just had 1 application and didn't care that I then couldn't run more without them stepping over each other.

Heck, in the early days, you could even run Rails as CGI, if you didn't have a whole lot of load. We used to do that for development mode as the entire stack would reload between each request.

We then moved on to FCGI. That's actually still a viable platform. We ran for years on FCGI. But the platform really hadn't seen active development for a very long time and while things worked, they did seem a bit creaky, and there was too much gotcha-voodoo that you had to get down to run it well.

Then came the Mongrel

Then came Mongrel and the realization that we didn't need Yet Another Protocol to let application servers and web servers talk together. We could just use HTTP! So packs of Mongrels were thrown behind all sorts of proxies and load balancers.
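
Conceptually, the proxy or load balancer in front of a pack of Mongrels just forwards each HTTP request to one of several backend ports. A minimal sketch of the simplest policy, round-robin, with hypothetical addresses; real balancers such as HAProxy add health checks, queuing, and smarter policies, and this toy isn't thread-safe:

```ruby
# Hypothetical round-robin picker over a pack of HTTP backends
class RoundRobin
  def initialize(backends)
    raise ArgumentError, "need at least one backend" if backends.empty?
    @backends = backends
    @next = 0
  end

  # Pick the backend for the next incoming request, cycling through the pack
  def pick
    backend = @backends[@next]
    @next = (@next + 1) % @backends.size
    backend
  end
end

# Hypothetical pack of three Mongrels, each an ordinary HTTP server
pack = RoundRobin.new(["127.0.0.1:8000", "127.0.0.1:8001", "127.0.0.1:8002"])
5.times { puts pack.pick }
# 127.0.0.1:8000
# 127.0.0.1:8001
# 127.0.0.1:8002
# 127.0.0.1:8000
# 127.0.0.1:8001
```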

Today, Mongrel (and its ilk of similar Ruby-based web servers such as Thin and Ebb) is still the predominant deployment environment. And for many good reasons: it's stable, it's versatile, it's fast.

The paradox of many Good Enough choices

But it's also a jungle of options. Which web server do you run in front? Do you go with Apache, nginx, or even lighttpd? Do you rely on the built-in proxies of the web server or do you go with something like HAProxy or Pound? How many mongrels do you run behind it? Do you run them under process supervision with monit or god?

There are a lot of perfectly valid, solid answers to those questions. At 37signals, we've been running Apache 2.2 with HAProxy against monit-watched Mongrels for a few years. When you've decided on which pieces to use, it's actually not a big deal to set it up.

But the availability of all these pieces, each of which seems to have valid arguments in its favor, leads to a paradox of choice. When you're done creating your Rails application, I can absolutely understand why you don't also want to become an expert on the pros and cons of web servers, proxies, load balancers, and process watchers.

And I think that's where this myth has its primary roots. The abundance of many Good Enough choices. The lack of a singular answer to How To Deploy Rails. No ifs, no buts, no "it depends".

The one-piece solution with Phusion Passenger

That's why I was so incredibly happy to see the Phusion team come out of nowhere earlier this year with Passenger (aka mod_rails). A single free, open source module for Apache that brought mod_php-like ease of deployment to Rails.

Once you've completed the incredibly simple installation, you get an Apache that acts as both web server, load balancer, application server and process watcher. You simply drop in your application and touch tmp/restart.txt when you want to bounce it and bam, you're up and running.
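
Triggering a restart only requires updating the file's modification time, which you'd normally do with touch tmp/restart.txt from the application root. Below is the Ruby equivalent of that touch, plus a simplified model of the server-side check. The RestartWatcher class is hypothetical and is not Passenger's actual code; it just assumes the restart is keyed off changes to the file's modification time:

```ruby
require "fileutils"
require "tmpdir"

# Hypothetical model: restart the app whenever tmp/restart.txt's mtime changes
class RestartWatcher
  def initialize(app_root)
    @path = File.join(app_root, "tmp", "restart.txt")
    @last_mtime = current_mtime
  end

  # Checked before handling a request: true if the file was touched since last check
  def restart_needed?
    mtime = current_mtime
    changed = !mtime.nil? && mtime != @last_mtime
    @last_mtime = mtime
    changed
  end

  private

  def current_mtime
    File.exist?(@path) ? File.mtime(@path) : nil
  end
end

Dir.mktmpdir do |app_root|
  watcher = RestartWatcher.new(app_root)
  puts watcher.restart_needed?   # => false, nothing touched yet

  # The Ruby equivalent of `touch tmp/restart.txt`
  FileUtils.mkdir_p(File.join(app_root, "tmp"))
  FileUtils.touch(File.join(app_root, "tmp", "restart.txt"))
  puts watcher.restart_needed?   # => true
  puts watcher.restart_needed?   # => false until the next touch
end
```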

But somehow the message of Passenger has been a little slow to sink in. There are already a ton of big sites running on it, including Shopify, MTV, Geni, and Yammer. We'll be moving Ta-da List over shortly, then hopefully the rest of the 37signals suite quickly thereafter.

So while there are still reasons to run your own custom multi-tier setup of manually configured pieces, just like there are people shying away from mod_php for their particulars, I think we've finally settled on a default answer. Something that doesn't require you to really think about the first deployment of your Rails application. Something that just works out of the box. Even if that box is a shared host!

In conclusion, Rails is no longer hard to deploy. Phusion Passenger has made it ridiculously easy.

Zed Shaw's infamous meltdown showed an angry man lashing out at anything and everything. It made a lot of people sad. It made me especially sad because this didn't feel like the same Zed that I had dinner with in Chicago or that I had talked to so many times before. I actually thought he might be in real trouble and in need of real help, but was assured by a third party that he wasn't (Zed never replied to my emails after publishing).

But Zed's state of mind isn't really what this is about. This is about the one factual assault he made against Rails that, despite being drenched in unbelievable bile, somehow still stuck in parts of the public consciousness.

The origin of the claim

Zed insinuated that it's normal for Rails to restart 400 times/day because Basecamp at one point did this with a memory watcher that would bounce its FCGIs when they hit 250MB. These FCGIs would then gracefully exit after the current request and boot up again. No crash, no lost data, no 500s.
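
The important property of that setup is that the limit is only checked between requests, so a process over the limit finishes what it's doing and then exits cleanly for its supervisor to replace. A minimal, hypothetical sketch of that pattern; the memory probe is injected so the example stays portable (a real watcher would read the process's resident memory), and the 250 MB limit is just an example value:

```ruby
# Hypothetical worker that exits gracefully once it grows past a memory limit
class Worker
  attr_reader :handled

  def initialize(limit_mb, memory_probe)
    @limit_mb = limit_mb
    @memory_probe = memory_probe  # callable returning current memory use in MB
    @handled = 0
  end

  # Process requests one at a time; report :restart instead of dying mid-request
  def run(requests)
    requests.each do |request|
      handle(request)
      @handled += 1
      # Checked only after a request completes, so nothing is cut off
      return :restart if @memory_probe.call > @limit_mb
    end
    :idle
  end

  private

  # Stand-in for real request handling
  def handle(request)
    request
  end
end

# Simulate a leak: each reading is 50 MB higher than the last
readings = [120, 170, 220, 270].each
worker = Worker.new(250, -> { readings.next })
puts worker.run(%w[a b c d e])   # => restart
puts worker.handled              # => 4
```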

But still an inconvenience, naturally. Nobody likes a memory leak. So I was happy when a patch emerged that fixed it and we could stop doing that. I believe the fix appeared some time in 2006. So even when Zed published his implosion at the end of 2007, this was already ancient history.

Yet lots of people didn't read it like that. I've received more than a handful of reports from people out talking Rails with customers who pull out the Zed rant and say that their consultants can't use Rails because it reboots 400 times/day. Eh, what?

Fact: Rails doesn't explode every 4 minutes

So let's make it clear once and for all: Rails doesn't spontaneously combust and restart itself. If we ever have an outright crash bug that can take down an entire Rails process, it's code red priority to get a fix out there.

A Rails application may of course still leak memory because of something done in user space that leaks. Just like an application on any other platform may leak memory.

Update: Zed points out that the leak was occurring while Basecamp was still on FCGI, not Mongrel, which is correct. I don't know how that makes the story any different (it was the fastthread fix that stopped the leak and our minor apps were on Mongrel with leaks too), but let's definitely fix the facts.
